A successful attack against 5G networks could disrupt critical infrastructure, manipulate sensor data, or even cause physical harm to humans. And network metadata helps the attacker.

from 7 June to 9 June 2022

BY:

Eric De Grasse

Chief Technology Officer

PROJECT COUNSEL MEDIA

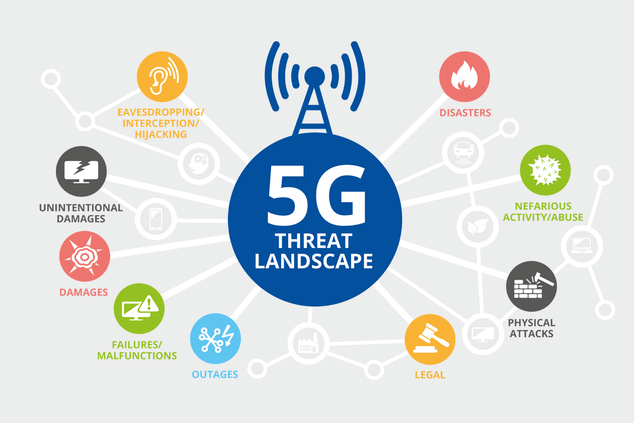

11 June 2022 – The problem with most mental models is that they treat cybersecurity as a finite problem that can be solved, rather than as an ongoing process that can only be managed. And 5G, now coming down the road, has the terrifying potential to raise the level of cyberattacks exponentially, making current attacks look like paper cuts. This year at FIC2022 the dangers began to roll out.

5G security has been a topic of conversation for over five years, and its emerging attack vectors have been coming to the fore, bit by bit. Deloitte researchers have uncovered a potential avenue of attack targeting network slices, a fundamental part of 5G’s architecture, and they made a gangbuster of a presentation at FIC2022 this week.

The stakes are high: Not just a faster 4G, next-generation 5G networks are expected to serve as the communications infrastructure for an array of mission-critical environments, such as public safety, military services, critical infrastructure, and the Industrial Internet of Things (IIoT). They also play a role in supporting latency-sensitive future applications like automated cars and telesurgery. A cyberattack on that infrastructure could have significant implications for public health and national security, and impact a range of commercial services for individual enterprises.

At the heart of any 5G network is a flexible, IP-based core network that allows resources and attributes to be assembled into individual “slices” — each of these network slices is tailored to fulfill the requirements requested by a particular application. For instance, a network slice supporting an IIoT network of sensors in a smart-factory installation might offer extremely low latency, long device battery life, and constricted bandwidth speed. An adjacent slice could enable automated vehicles, with extremely high bandwidth and near-zero latency. And so on.
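To make the idea of a slice concrete, here is a minimal Python sketch of how slice requirements might be described and compared. The class, the field names and every number are illustrative assumptions; none of them comes from the 5G standard or from the Deloitte research.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SliceProfile:
    """Hypothetical description of what one network slice promises."""
    name: str
    max_latency_ms: float       # worst-case latency the slice is built for
    min_bandwidth_mbps: float   # bandwidth the slice is provisioned for
    battery_optimized: bool     # True if devices are expected to sleep aggressively


# Illustrative values only, loosely following the two examples in the text.
IIOT_SENSORS = SliceProfile("iiot-sensors", max_latency_ms=5,
                            min_bandwidth_mbps=1, battery_optimized=True)
AUTOMATED_VEHICLES = SliceProfile("automated-vehicles", max_latency_ms=1,
                                  min_bandwidth_mbps=1000, battery_optimized=False)


def slices_meeting(profiles, latency_ms, bandwidth_mbps):
    """Return the names of slices that satisfy an application's requirements."""
    return [p.name for p in profiles
            if p.max_latency_ms <= latency_ms
            and p.min_bandwidth_mbps >= bandwidth_mbps]
```

An application that needs 2 ms latency and 500 Mbps would match only the vehicle slice in this toy data, which is the point of slicing: each application is placed on the slice tailored to it.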

Thus, one 5G network supports multiple adjacent network slices, all of which make use of a common physical infrastructure (i.e., the radio access network, or RAN). Deloitte collaborated on a 5G research project with Virginia Tech University in the U.S. to explore whether it was possible to exploit 5G by compromising one slice, then escaping it to compromise a second. The answer to that turned out to be yes. Said a presenter:

“Throughout our journey with Virginia Tech, our objective was uncovering how to make sure that appropriate security is in place whenever a 5G network is put in for any type of industry or any customer. We saw network slicing as a core area of interest for our research, and we set about discovering avenues of compromise.”

For a brief view of how network slicing works, here is a short video from the Mobile World Congress a few years ago:

ABOVE: Brandon Walsh of the Finnish company Cloudstreet on “network slicing”. One of the cooler features of 5G is that it lets you split out dedicated capacity for particular use cases – so-called ‘network slicing’.

Achieving lateral movement via network slicing

Abdul Rahman, associate vice president at Deloitte, notes that attacking one slice in order to get to a second could be seen as a form of container escape in a cloud environment — in which an attacker moves from one container to another, moving laterally through a cloud infrastructure to compromise different customers and services.

“When we look at the end-to-end picture of a 5G network, there’s the 5G core, and then the 5G RAN, then there are the end devices and the users after the end devices. The core has really evolved to a point where a lot of the services are essentially in containers, and they’ve been virtualized. So there may then be a similar [attack-and-escape] process where we’re able to influence or affect a device on network slice two from a device or a compromise within network slice one.”

The research uncovered that an initial compromise of the first network slice can be achieved by exploiting open ports and vulnerable protocols, he explains. Or, another path to compromise would involve obtaining the metadata necessary to enumerate all of the services on the network, in order to identify a service or a set of services that may have a vulnerability, such as a buffer overflow that would allow code execution.
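Defenders can run the same kind of enumeration against their own inventory before an attacker does. The following is a minimal sketch under stated assumptions: the `Service` record, the advisory set and all service names are hypothetical, and a real deployment would pull both from its own asset database and vulnerability feed.

```python
from typing import NamedTuple


class Service(NamedTuple):
    """Hypothetical inventory record for one service running inside a slice."""
    slice_id: str
    host: str
    port: int
    name: str
    version: str


# Hypothetical advisory data: (service name, version) pairs with known flaws.
KNOWN_VULNERABLE = {("metrics-agent", "1.2.0"), ("legacy-ftp", "0.9")}


def exposed_weak_points(inventory, vulnerable=KNOWN_VULNERABLE):
    """Return the services whose name and version appear in the advisory set."""
    return [s for s in inventory if (s.name, s.version) in vulnerable]
```

The metadata does the work here: once service names and versions are known, finding the weak point is a simple lookup.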

NOTE: at the end of this post we have a brief tutorial on how network metadata makes systems vulnerable to cyber attack.

Then, to achieve “slice-escape,” there are capabilities in the wireless space to emulate tons of devices that can join networks and start causing some stress on the core network. It’s possible to bring in some scanning capabilities to start exploiting vulnerabilities across slices.

A successful attack would have a number of layers and steps, and would be non-trivial, Deloitte found — but it can be done.

From a real-world feasibility perspective, it’s really dependent on how much money is spent. Cyberattackers would likely make an ROI calculation when weighing whether an attack is worth the time and expense. It’s about how serious and hardened the network is, if it’s a mission-critical network, and how serious the target application is. Is it an application for, say, shelf replenishment or cashierless checkout, or is it a military or government application?

If the attacker is a well-funded advanced persistent threat (APT) interested in mounting destructive attacks on, say, an automated pipeline, the approach would be more convoluted and resource-intensive.

So this sets the stage for a bad actor that utilizes advanced recon and surveillance-detection techniques to minimize the chance of being seen by the blue side. You utilize observation to determine avenues of approach and key terrain, while ensuring concealment. If we’re going to recon a network, we want to do it from a place where we can scan the network and obfuscate our reconnaissance traffic amongst all the other traffic that’s there. And the attackers are going to build this network topology, aka an attack graph, with nodes that carry metadata about the enumerated services they would like to attack.
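An attack graph of this kind is also a useful defensive model, because it shows which paths between slices need to be closed. Below is a minimal sketch, assuming the graph is a plain dictionary of reachable neighbors and that each node carries a small metadata record. All node names and services are invented for illustration.

```python
from collections import deque

# Hypothetical topology: which node can reach which.
EDGES = {
    "iot-device-slice1": ["edge-gateway"],
    "edge-gateway": ["core-function", "logging"],
    "core-function": ["sensor-slice2"],
}

# Hypothetical metadata gathered per node during enumeration.
METADATA = {
    "edge-gateway": {"services": ["ssh", "metrics-agent"]},
    "core-function": {"services": ["http-api"]},
}


def shortest_attack_path(edges, start, target):
    """Breadth-first search; returns the shortest node path or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in edges.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


def describe_path(path, metadata):
    """Pair each node on a path with the services recorded for it."""
    return [(node, metadata.get(node, {}).get("services", [])) for node in path]
```

Every node on a returned path is a place where segmentation, patching or monitoring would break the chain from one slice to the next.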

Real-World Risk

When it comes to potential outcomes of a successful attack, the presenters used the example of a campaign against an industrial sensor network for a smart-factory application:

“Ultimately, we can deploy malware that can actually impact the data that’s gathered from those sensors, whether it’s temperature, barometric pressure, its line of sight, computer vision, whatever that may be. Or it may be able to occlude the image or maybe only send back a portion of the results by manipulating what the sensor has the ability to see. That could potentially cause false readings, false positives, and the impact is huge for manufacturing, for energy, for transportation — any of those areas that depend on sensors to give them near-real-time outputs for things like health and status.”

The Internet of Medical Things (IoMT) is another area of concern, due to the ability to directly impact patients using remote health services such as kidney dialysis or liver monitoring, or those who have a pacemaker.

NOTE: the IoMT is the collection of medical devices and applications that connect to healthcare IT systems through online computer networks. It has become its own “practice group” for the cybersecurity, IT, legal and software development industries. Medical devices equipped with Wi-Fi allow the machine-to-machine communication that is the basis of IoMT. IoMT devices link to cloud platforms such as Amazon Web Services, on which captured data can be stored and analyzed. IoMT is also known as healthcare IoT.

Examples of IoMT include remote patient monitoring of people with chronic or long-term conditions; tracking patient medication orders and the location of patients admitted to hospitals; and patients’ wearable mHealth devices, which can send information to caregivers. Infusion pumps that connect to analytics dashboards and hospital beds rigged with sensors that measure patients’ vital signs are medical devices that can be converted to or deployed as IoMT technology.

As is the case with the larger Internet of Things (IoT), there are now more possible applications of IoMT than before because many consumer mobile devices are built with Near Field Communication (NFC) radio frequency identification (RFID) tags that allow the devices to share information with IT systems. RFID tags can also be placed on medical equipment and supplies so that hospital staff can remain aware of the quantities they have in stock. 5G networks have accelerated this development – and have opened it up to even higher levels of vulnerabilities.

There is also another form of attack that involves deploying malware on vulnerable IoT devices, then using them to jam or flood the air interfaces or take up shared computational resources at the edge. That can lead to denial of service across slices since they all share the same RAN and edge computing infrastructure, Deloitte found.

Defending against 5G network-slicing attacks

When it comes to defending against attacks involving network slicing, there are at least three broad layers of cybersecurity to deploy, the researchers note:

1. Convert threat intelligence, which consists of indicators of compromise (IOCs), into rules.

2. Use artificial intelligence and machine learning to detect anomalous behaviors.

3. Implement platforms that contain standard detection mechanisms, filtering, the ability to create automation, integration with SOAR, and alerting.
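The first layer, turning IOCs into rules, can be sketched in a few lines of Python. This is an illustration under assumptions rather than any vendor's rule format: the IOC records, the field names (`dst_ip`, `domain`) and the event layout are all hypothetical, and the addresses come from ranges reserved for documentation.

```python
def compile_rules(iocs):
    """Group IOC values by the event field they should be compared against."""
    rules = {}
    for ioc in iocs:
        rules.setdefault(ioc["type"], set()).add(ioc["value"].lower())
    return rules


def match_event(event, rules):
    """Return the sorted list of event fields that match a known IOC."""
    return sorted(
        field for field, values in rules.items()
        if str(event.get(field, "")).lower() in values
    )


# Hypothetical threat-intelligence feed.
EXAMPLE_IOCS = [
    {"type": "dst_ip", "value": "203.0.113.7"},
    {"type": "domain", "value": "Bad.example.com"},
]
```

Exact-match rules like these are cheap to evaluate at scale, but they only catch what is already known, which is why they are one layer among several.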

It’s important, as ever, to ensure defense in depth. And I am reminded of something cybersecurity maven Steve King once told us:

“The rules have a shelf life. You can’t totally depend on rules because they get aged off because people create malware variants. You can’t totally depend on what an AI tells you about probability of malicious activity. And you can’t really believe in the platform because there may be gaps.”

Much of the defense work also has to do with gaining a view into the infrastructure that doesn’t overwhelm defenders with information. The key is visibility because when we look at 5G, there’s massive connectivity: a lot of IoT, sensors, and devices, and you also have containerized deployments and cloud infrastructure that scales up and down and gets deployed in multiple zones and multiple hybrid clouds, and some clients have more than one vendor for their cloud. It’s easier when we don’t have a lot of slices or we don’t have a lot of device IDs or SIM cards or wireless connections. But there are potentially millions of devices that you may have to look at and correlate data for.

There’s also ongoing management to consider, since the 5G standard is updated every six months with new features. As a result, most operators are still scratching the surface on the amount of work they have to put into shoring up security for 5G networks, the researchers say, noting that the workforce shortage is also affecting this segment. And that means that automation will be required to handle tasks that need to be done in a repeatable manner.

And that’s the problem. Because automation from a source perspective can go out to these devices and reconfigure them on the fly. But the question is: do you want to do that in production? Or do you want to test that first? Typically, we are risk averse, so we test when we do change requests, and then we vote on it. And then we deploy those changes in production … and that takes a certain amount of time. But those processes can be automated with DevSecOps pipelines. Solving this will take some out-of-the-box thinking.

POSTSCRIPT

Network metadata: a hacker’s best friend

We covered this topic in a very detailed monograph last year, so herein just a few of the dangers and risks, the tip of a very huge iceberg of sensitive information companies are exposing: the metadata of a document.

“What is it and why is it such a juicy source of information for advanced attackers?” you might ask. Well, a document’s metadata allows an attacker to collect various highly sensitive data such as usernames, software used in a company, location of file shares, etc.

As part of a practical exercise last year, we showed you how you can find what metadata your company is leaking. Collecting this metadata and creating a nice Splunk dashboard gives you a completely new type of threat intelligence and situational awareness about your company’s attack surface for a very low cost.

So, what is a document’s metadata? Most of our eDiscovery and information governance readers already know what metadata is and what it contains, how it is used to verify documents and photographs, how it is used in litigation, etc.

NOTE: next week our boss, Greg Bufithis, will post a long piece on how metadata is used to verify photographs and other OSINT data in the Ukraine War.

Every organization is producing files on a daily basis with content that is approved for publishing. There’s a catch to that: You as a company are in fact publishing more data than you think. Every time a document is created, the software used to create it inserts so-called metadata, which can be described as data about data. What does it mean? It’s not the content of the file but rather:

– What software has been used to create this file? (e.g. Microsoft Word 2019)

– What user created this file? (e.g. john.doe, u12345)

– What is the computer name where it was created? (e.g. Super Secret Lab PC N203)

– When was the file created?

– When was the file last modified?

– When was the file printed?

– The exact file path on the disk/share from which the file was exported

And much, much more.

This information is automatically inserted into the document by the software you use to create/modify the document. The amount and type of information inserted depends on the software used and its configuration. Organizations rarely try to limit even the spread of this information. As a result, this sensitive information is exposed, and it gives your adversaries and competitors good reason to spend time analyzing what it reveals about your business.
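To see what one of your own files gives away, the sketch below reads the core properties of a Word document using only the Python standard library. It relies on the fact that a .docx file is a ZIP archive whose core metadata conventionally lives in `docProps/core.xml`. The function name and the output keys are our own choices, and a given file may store fewer fields than those listed or none at all.

```python
import zipfile
import xml.etree.ElementTree as ET

# XML namespaces used by the Office Open XML core-properties part.
NS = {
    "cp": "http://schemas.openxmlformats.org/package/2006/metadata/core-properties",
    "dc": "http://purl.org/dc/elements/1.1/",
    "dcterms": "http://purl.org/dc/terms/",
}

# Output key -> element to look for.
FIELDS = {
    "creator": "dc:creator",
    "last_modified_by": "cp:lastModifiedBy",
    "created": "dcterms:created",
    "modified": "dcterms:modified",
    "last_printed": "cp:lastPrinted",
}


def read_core_properties(docx_file):
    """Return the core metadata found in a .docx (a path or a file object)."""
    with zipfile.ZipFile(docx_file) as archive:
        if "docProps/core.xml" not in archive.namelist():
            return {}
        root = ET.fromstring(archive.read("docProps/core.xml"))
    found = {}
    for key, tag in FIELDS.items():
        element = root.find(tag, NS)
        if element is not None and element.text:
            found[key] = element.text
    return found
```

Calling `read_core_properties("report.docx")` on a file downloaded from your own website (the file name is just an example) shows the usernames and timestamps that anyone else can read too.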

And so it becomes a hacker’s gold mine.

Generally, there are three types of attackers that your organization is most likely to face:

– Script kiddies

– Advanced attackers/APTs

– Insiders

Insiders are out of the scope of this article. I could write brontobytes. But if you follow any of our other cyber stories you’ll find thousands of cases of the damage done by insiders.

So let’s focus on the other two categories.

One of the biggest distinctions between a script kiddie and an advanced attacker is how much time they spend on the reconnaissance phase in the cyber kill chain. Reconnaissance is one of the most important phases, often determining whether the attack will be successful or not. A threat actor is mapping the battleground, looking for targets and all the available surrounding information to increase the success of the planned attack. The threat actor is commonly looking for either an asset (an unpatched server connected to the Internet) or an individual (Jane Doe from the finance department running on Windows XP that was never updated) to be compromised.

Finding vulnerable assets is done by doing subdomain enumeration, discovering internet-exposed devices in your company’s IP range and running a vulnerability scan on top of it. It’s a little bit more complicated when it comes to finding an individual to compromise. This requires doing online OSINT (Open Source Intelligence), using for instance LinkedIn. OSINT often reveals a list of employees or even full organizational charts. How does an attacker find who is the most vulnerable person from the target’s organization? That’s the tricky part, but the advanced levels of “crawlers” and “web scraping” and other AI tools make it easier and easier.

We are all bombarded with industry reports about how phishing attacks have become the #1 vector to compromise a company. This can be done by sending an innocent-looking email with an attached Microsoft Word document containing a nifty VBA macro that drops a custom PowerShell RAT. With a good pretext and preparation, the target is very likely to open the attachment. Would this attack be successful? Maybe. The attacker wants to increase the success of the attack, but not by sending hundreds of those emails, which would raise a red flag for the security team monitoring your company. How to do that? Here’s a brief list of what can increase the chances of a compromise and help in the post-exploitation phase:

– What software is the target using? If he/she uses LibreOffice rather than Microsoft Word, sending a VBA macro wouldn’t work in that case.

– What is the operating system of the target? An exploit leveraging a vulnerability in how Windows parses TTF fonts wouldn’t work on macOS.

– What’s the target’s username and e-mail address? This helps with getting a foothold in the post-exploitation phase while staying under the radar.

– What is the file share where most of the company documents are stored? An attacker can plan a lateral movement once the target is compromised or just blow it off with a targeted ransomware attack.

– Which contractors are working for the target’s company? It’s known that advanced attackers sometimes choose contractors because of their less strict security measures.

Now, would you publish all this sensitive information on the websites of your company for anyone to download and use in their interest? No? Well … if you follow our other cybersecurity maven Andy Jenkinson this is EXACTLY what you are doing by publishing files on your websites without removing the metadata. All of this information can be found there and we bet you don’t even know it’s there (we call it dark data). Dark data shouldn’t be published and poses a huge security risk to your company.

Also, by now you probably have heard about the European GDPR (General Data Protection Regulation), requiring you to build and maintain an inventory of your files/data. Have you also included all your publicly exposed files and the sensitive data that you are publishing? Follow Andy and you’ll see case after case after case of companies not doing what they need to do.

This is the type of threat intelligence that your security team should be collecting. Buying TI from vendors about all the APT actors with their IOCs is cool, but it costs tons of money and most of it will never appear in your environment anyway. We recommend that you focus first on understanding how you are perceived by your adversaries, what the attack surface of your company is, so you know at least what you need to protect and keep a very close eye on.

That means avoiding situations where your company’s attack surface is leaking a list of sensitive usernames screaming “I’m running on Windows XP because service desk is too lazy to upgrade my laptop to something more secure”.

To conclude, a few points from Andy:

The sheer volume of metadata generated by the network is staggering and comes in a variety of forms, from DNS queries (DNS is the Domain Name System, the phonebook of the Internet; without it there is no internet) and firewall or proxy access logs to even lowly spam messages – the metadata attached to each one of these sources represents a pixel of threat intelligence. An isolated pixel in a digital image won’t tell you very much by itself, but once you collect, correlate and organize all these pixels, a picture will begin to come into focus – one that can help answer the most important question for today’s cybersecurity practitioner: have we been compromised?

There are many insights from network metadata I could detail but just two points:

1. Many of the most persistent strains of malware will automatically scan a network looking for accessible hosts from which to escalate their privileges, and in response the firewall will either block or drop the connection. When the firewall drops a lot of different connections from a single source host, that’s usually a pretty good indication that an intruder is already inside and scanning the network to identify lateral openings. In most instances, the adversary will opt to use DNS for its communications because it provides the attacker with greater flexibility and can persist undetected for a longer period of time. However, if an attacker is deprived of this option they will instead default to an IP-based communication method, making it all the more important to incorporate this source of metadata.

2. Once an attacker has gained a foothold in the network, they will use the network itself to move laterally in order to achieve the goal: the exfiltration or encryption of host data. This is why I say this is the crucial phase of an attack: specific and distinct types of network traffic are being generated, leaving the attacker vulnerable to discovery. However, because the network is a noisy place, identifying and isolating these markers can be especially tricky. Analyzing netflow metadata can provide important clues as to how they are traversing the network, providing network defenders with valuable insight into how and at which point an attacker is attempting to escalate privileges or propagate their payload. They have the advantage.
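The first point, many dropped connections from a single source host, translates directly into a simple check over firewall metadata. The sketch below is a rough illustration: the record layout (`src`, `dst`, `dst_port`, `action`) and the threshold of 20 distinct targets are assumptions, and real firewall logs would need to be parsed into this shape first.

```python
from collections import defaultdict


def suspected_scanners(firewall_log, min_targets=20):
    """Flag source hosts whose dropped connections span many distinct targets.

    Returns a dict mapping each flagged source to its number of distinct
    (destination, port) pairs among dropped connections.
    """
    targets = defaultdict(set)
    for record in firewall_log:
        if record["action"] == "drop":
            targets[record["src"]].add((record["dst"], record["dst_port"]))
    return {
        src: len(seen)
        for src, seen in targets.items()
        if len(seen) >= min_targets
    }
```

Counting distinct targets rather than raw drops keeps a single misconfigured client, retrying the same blocked connection over and over, from being mistaken for a scanner.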

Yes, moving to a cloud-native architecture for your enterprise applications can deliver tremendous business value, adding scale and agility while off-loading onerous tasks like patching and upgrading server infrastructure.

However, in every cloud environment, whether Amazon Web Services, Azure, or Google Cloud, there is a new category of risk. Cloud-native threats stem from the new context and configuration requirements and network metadata you have in a cloud environment. Default settings like public access to storage objects have left sensitive data out in the open, easy to steal by anyone crawling for these weaknesses.

It’s easy to make mistakes in a new environment, with new settings introduced continuously as new capabilities are added by cloud providers. The configuration of your cloud environment is always your responsibility. AWS and others have no control over how you use their services. They are but a template for you to build from.
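Because the configuration is yours to get right, it is worth auditing it routinely. The sketch below works on a plain dictionary of storage settings that you would export from your own environment. The keys `public_read` and `access_logging` are hypothetical placeholders, not the setting names of AWS, Azure or Google Cloud.

```python
def audit_storage(buckets):
    """Return (bucket name, finding) pairs for risky storage settings.

    `buckets` maps a bucket name to a dict of settings; missing keys are
    treated as the unsafe case so that gaps in the export are not ignored.
    """
    findings = []
    for name, settings in sorted(buckets.items()):
        if settings.get("public_read", True):
            findings.append((name, "anonymous read access is enabled or unknown"))
        if not settings.get("access_logging", False):
            findings.append((name, "access logging is disabled or unknown"))
    return findings
```

Treating a missing setting as a finding is a deliberate choice here: in a new environment, the setting you never looked at is the one most likely to be left at a permissive default.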

So not understanding the outcome of your configurations and how you build cloud-native applications can have catastrophic consequences, and understanding network metadata falls on your shoulders. Read, follow and learn from experts like Steve King and Andy Jenkinson linked above.