Feed machine learning data sets with the darkest parts of the web and … 😱

BY:

Cassandra Este

Social Media Analyst

PROJECT COUNSEL MEDIA

29 September 2022 (San Francisco, California) – When I was working toward my artificial intelligence degree (completed about 7 years ago), I had a professor who said “if you feed machine learning data sets with the darkest parts of the web, you’ll create a monster”. We did not do that as part of our degree program. But he was right.

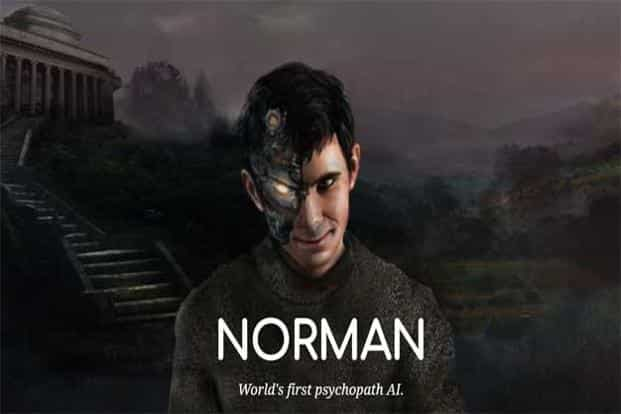

As if we needed to be convinced, scientists at MIT conducted an experiment that highlights the importance of machine learning data quality. Various media reports have noted that scientists at the MIT Media Lab created a “Psychopath AI” by feeding content from the darkest corners of the web into its machine learning program. The demented AI is aptly named Norman (as in Psycho’s Norman Bates) and has been in development for a number of years. The link to the full MIT report is here.

A recent BBC Science program on Norman noted:

“The aim of this experiment was to see how training AI on data from ‘the dark corners of the net’ would alter its viewpoints. ‘Norman’ was pumped with continuous image captions from macabre Reddit groups that share death and gore content. And this resulted in the AI meeting traditional ‘psychopath’ criteria, per psychiatrists. Researchers came to their diagnosis after showing ‘Norman’ the Rorschach test. The test comprises a series of inkblots and depending on how viewers interpret them, they can indicate mental disorders. AI with neutral training interprets the images as day-to-day objects like umbrellas. However, ‘Norman’ appeared to perceive the images as executions and car crashes.”

Lovely. This horror-in-horror-out result should be no surprise to anyone who follows developments in AI and machine learning. The researchers say this illustrates that AI bias is not the fault of the algorithms themselves but of the data they are fed.

Perhaps, but that is a purely academic distinction as long as unbiased datasets remain figments of imagination. While some point to synthetic data as the solution, that approach has its own problems.

NOTE: earlier this year, Salvatore Nicci, one of our Technology Analysts, provided a brief tutorial on synthetic data sets which you can access by clicking here.

Despite the dangers, the world is increasingly being run by algorithms. We are unlikely to reverse course, so each development team will just have to choose which flawed method to embrace.